Job

- Level

- Senior

- Job Field

- BI, Data

- Employment Type

- Full Time

- Contract Type

- Permanent employment

- Salary

- from 53.802 € Gross/Year

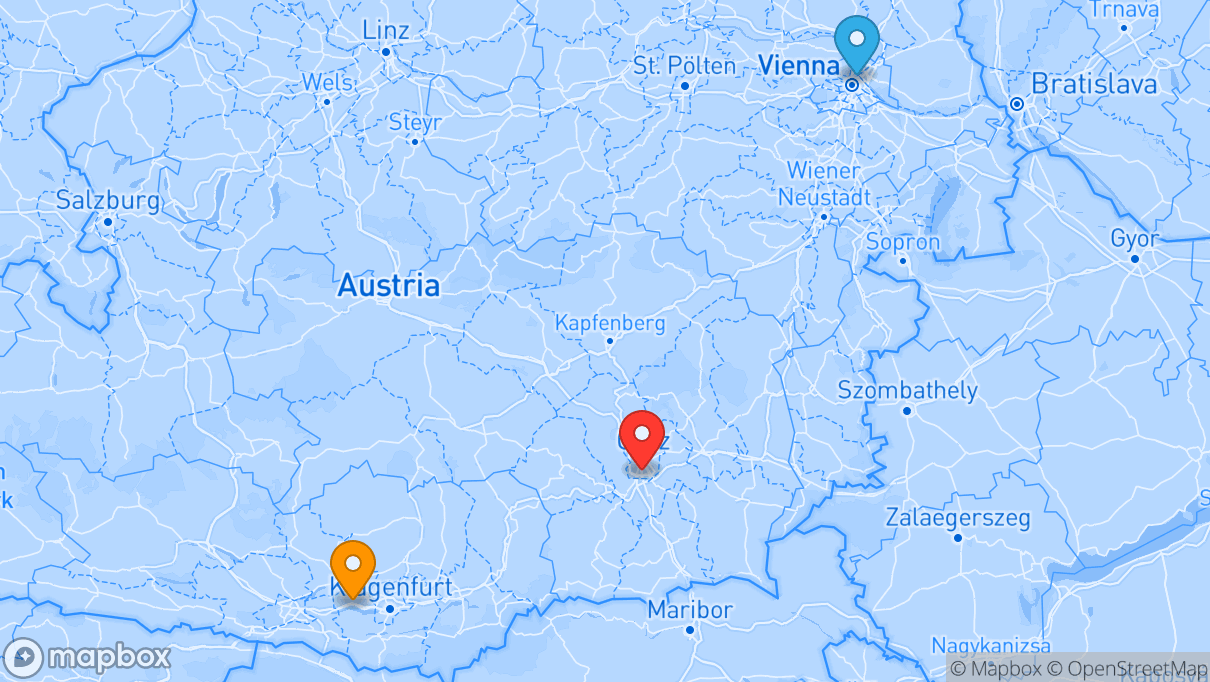

- Location

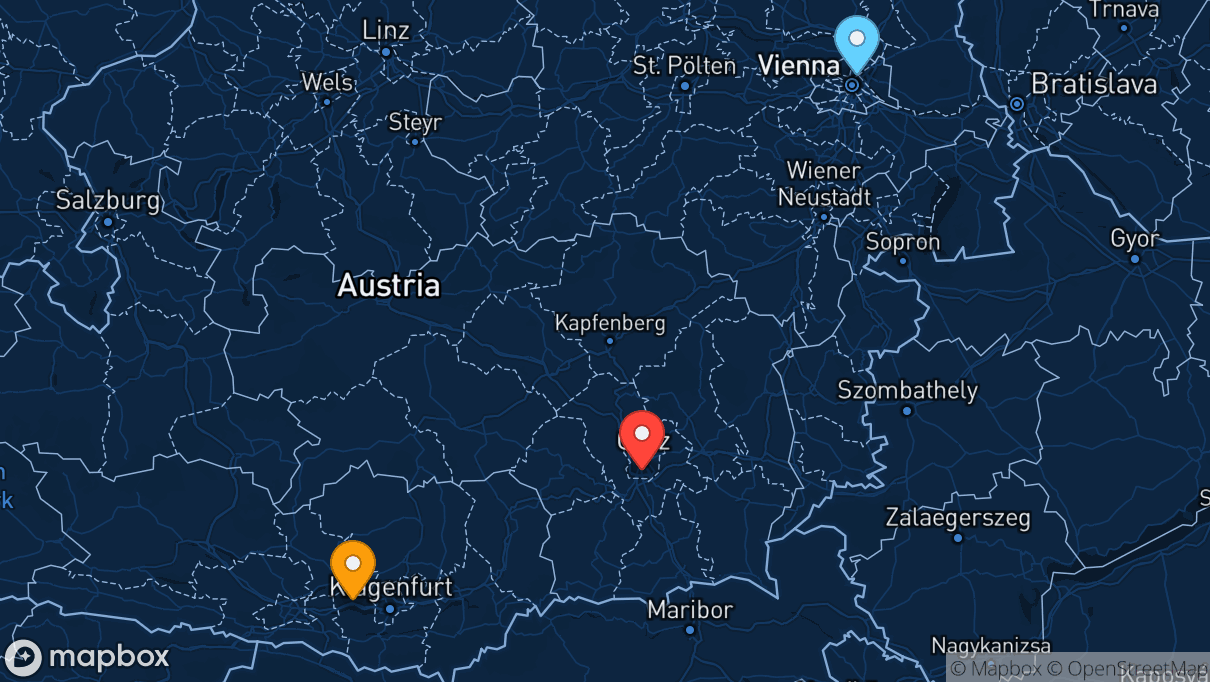

- Graz, Vienna, Pörtschach am Wörther See

- Working Model

- Hybrid, Onsite

Job Summary

In this role, you will design scalable data solutions, build efficient ETL processes, work hands-on with Databricks and Google BigQuery daily, and ensure data quality and integration with cloud services.

Your role in the team

As a Senior Data Engineer, you’ll play a key role in building modern, scalable, and high-performance data solutions for our clients. You’ll be part of our growing Data & AI team and work hands-on with leading data platforms, supporting clients in solving complex data challenges.

Your key responsibilities are:

- Designing, developing, and maintaining robust data pipelines using Databricks, Google BigQuery (Dataflow, Dataform or similar), Spark, and Python

- Building efficient and scalable ETL/ELT processes to ingest, transform, and load data from various sources (databases, APIs, streaming platforms) into cloud-based data lakes and warehouses

- Leveraging ecosystems such as Databricks (SQL, Delta Lake, Workflows, Unity Catalog) or Google BigQuery to deliver reliable and performant data workflows

- Integrating with cloud services such as Azure, AWS, or GCP to enable secure, cost-effective data solutions

- Contributing to data modeling and architecture decisions to ensure consistency, accessibility, and long-term maintainability of the data landscape

- Ensuring data quality through validation processes and adherence to data governance policies

- Collaborating with data scientists and analysts to understand data needs and deliver actionable solutions

- Staying up to date with advancements in data engineering and cloud technologies to continuously improve our tools and approaches

Our expectations of you

Qualifications

Essential Skills:

- 3+ years of hands-on experience as a Data Engineer working with either Databricks or Google BigQuery (or both), along with Apache Spark

- Strong programming skills in Python, with experience in data manipulation libraries (e.g., PySpark, Spark SQL)

- Solid understanding of data warehousing principles, ETL/ELT processes, data modeling techniques, and database systems

- Proven experience with at least one major cloud platform (Azure, AWS, or GCP)

- Excellent SQL proficiency for data querying, transformation, and analysis

- Excellent communication and collaboration skills in English

- Ability to work independently as well as part of a team in an agile environment

Beneficial Skills:

- Understanding of data governance, data quality, and compliance best practices

- Experience working in multi-cloud environments

- Familiarity with DevOps, Infrastructure-as-Code (e.g., Terraform), and CI/CD pipelines

- Interest or experience with machine learning or AI technologies

- Experience with data visualization tools such as Power BI or Looker

Experience

- 3+ years of hands-on experience as a Data Engineer working with Databricks and Apache Spark.

- Experience with core components of the Databricks ecosystem: Databricks Workflows, Unity Catalog, and Delta Live Tables.

Benefits

More Net Pay

- 🚙Pool Car

- 💻Company Notebook for Private Use

- 🛍Employee Discount

- 🎁Employee Gifts

- 📱Company Phone for Private Use

- 🚎Public Transport Allowance

Health, Fitness & Fun

- 👨🏻‍🎓Mentor Program

- ⚽️Tabletop Soccer, etc.

- 🧠Mental Health Care

- 👩‍⚕️Company Doctor

- 🎳Team Events

- 🚲Bicycle Parking Space

- 🙂Health Care Benefits

Work-Life Integration

- 🕺No Dress Code

- 🧳Relocation Support

- 🅿️Employee Parking Space

- 🐕Animals Welcome

- 🏠Home Office

- ⏰Flexible Working Hours

- ⏸Educational Leave/Sabbatical

- 🚌Excellent Public Transport Connections

This is your employer

NETCONOMY

Graz, Madrid, Belgrade, Novi Sad, Pörtschach, Zürich, Vienna, Dortmund, Amsterdam, Berlin

NETCONOMY is a leader in designing digital platforms and customer experience innovations, helping companies to identify and successfully capitalize on digital potential. Our flexible, scalable solutions are based on the latest technologies from SAP, Google Cloud, and Microsoft Azure. With over 20 years of experience and nearly 500 experts across Europe, we help our clients increase their innovation power and expand their core businesses into the digital world.

Description

- Company Size: 250+ Employees

- Founding Year: 2000

- Language: English

- Company Type: Digital Agency

- Working Model: Hybrid

- Industry: Internet, IT, Telecommunication

Dev Reviews

by devworkplaces.com

Total (2 Reviews)

- Engineering: 3.8

- Career Growth: 3.7

- Culture: 4.5

- Working Conditions: 4.2